The computer science team at the University of Jena is developing robust artificial intelligence (AI) models for automated image analysis and species identification of moths and other insects. These AI models will efficiently process the images from the project’s Automated Recorders for Nocturnal Insects (ARNI) and reliably identify the moths captured. This is challenging: Germany is home to more than 1,100 species of macro moths, and to over 4,000 species if micro moths are included. Paul Bodesheim’s team in the Computer Vision Group will integrate various forms of knowledge, such as biological taxonomy or the size characteristics of moths, into the machine learning processes in order to achieve a high degree of accuracy in species identification.

The AI models build on preliminary work on moth species identification carried out in the AMMOD project. The species identifications made by the AI models are stored in a database and form the basis for comprehensive data analyses on biodiversity and on changes in species diversity. In addition, the AI methods are intended to support the collection of annotated training data by providing suggestions to users of the identification system. We also implement gamification approaches for playful, AI-assisted annotation. The AI models developed in this project have a strong influence on basic research in the field of visual species identification. They are also important for the development of the monitoring database and for analyses of changes in species diversity.

Deep learning methods and artificial neural networks

The AI models use ‘deep learning’ methods of machine learning, which have been state of the art in this field for over 10 years and account for most current AI research. Combined with large amounts of sample data for training a prediction model, these learning methods achieve remarkable performance on demanding tasks in image and speech recognition. This is also reflected in the many “intelligent” apps that almost all of us use, consciously or unconsciously, on our smartphones today. The basis for this is formed by artificial neural networks, which extract information from images or texts by chaining together a large number of mathematical calculations. An image is represented as a set of numbers (the color values of each pixel) and serves as the input to the mathematical functions represented by the neural network. Each individual function is described by parameters whose values are learned from huge image datasets with assigned labels (e.g., manually identified insect species). In addition to large amounts of training data, modern hardware also contributes to learning success, in particular computing clusters equipped with powerful graphics cards that perform many calculations efficiently and in parallel.
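
To make the idea of “chaining mathematical calculations” concrete, here is a minimal sketch in plain Python, not the project’s model: a tiny 2x2 grayscale image is flattened into numbers and passed through two layers with a non-linearity in between. The names `flatten`, `linear`, `relu`, and `network` and the parameter values `W1`, `b1`, `W2`, `b2` are hypothetical; a real network has millions of parameters, and they are learned from labeled images rather than set by hand.

```python
# A tiny 2x2 grayscale "image": each number is one pixel's intensity in [0, 1].
image = [[0.0, 0.5],
         [1.0, 0.25]]


def flatten(img):
    """Turn the pixel grid into a flat list of numbers, the network's input."""
    return [value for row in img for value in row]


def linear(x, weights, bias):
    """One layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, bias)]


def relu(x):
    """Element-wise non-linearity applied between layers."""
    return [max(0.0, v) for v in x]


# Hypothetical parameters; a real network learns them from labeled images.
W1 = [[0.2, -0.1, 0.4, 0.0],
      [-0.3, 0.5, 0.1, 0.2]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0],
      [0.5, 0.5],
      [-0.2, 0.3]]
b2 = [0.0, 0.0, 0.0]


def network(img):
    """The chain of functions: flatten -> linear -> ReLU -> linear -> one score per class."""
    hidden = relu(linear(flatten(img), W1, b1))
    return linear(hidden, W2, b2)


scores = network(image)                                      # approx. [-0.15, 0.425, 0.08]
predicted = max(range(len(scores)), key=scores.__getitem__)  # 1
```

The index of the largest score is taken as the predicted class. Training consists of adjusting the parameters so that these scores agree with the labels of the training images.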

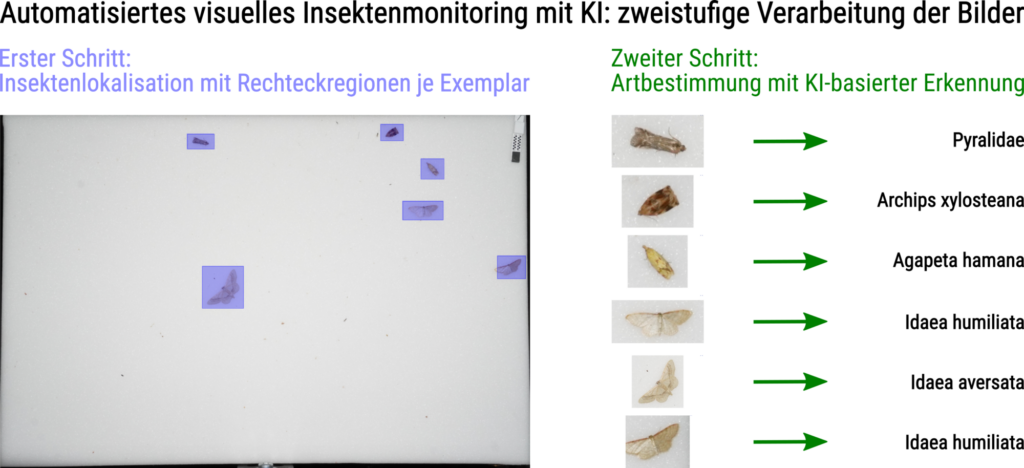

Insect localization in the image

The first step in the automated processing of camera trap images is the localization of individual insects. This involves determining the position of each specimen in the image and its approximate size. Both are represented by bounding boxes, which the computer determines through a foreground-background distinction: the insects are defined as foreground objects and everything else as background. Other “objects” may also be present in the image alongside the insects, for example a scale for size conversion in one corner of the image, or dirt on the screen surface caused by wind and weather. Therefore, in addition to image processing and computer vision algorithms, AI is also needed so that the system can distinguish insects from non-insects (such as scales, leaves, or dirt). The rectangular regions have the advantage that these image sections can subsequently be processed as smaller sub-images or individual images, for each of which a clear species assignment can be made. By treating the localization of insects in the image and species identification separately, different AI models can be optimized independently for these two tasks.
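
The sketch below illustrates the foreground-background idea with a simple brightness threshold and connected-component grouping, assuming dark insects on a bright screen. It is an illustration in plain Python, not the localization model used in the project. The functions `foreground_mask`, `bounding_boxes`, `crop`, and `looks_like_insect` are hypothetical; in particular, the size-based `looks_like_insect` filter only stands in for the learned classifier that is needed to reject scales, leaves, or dirt.

```python
from collections import deque


def foreground_mask(gray, threshold=0.5):
    """Mark pixels darker than the screen as foreground (assumes dark insects on a bright screen)."""
    return [[v < threshold for v in row] for row in gray]


def bounding_boxes(mask):
    """Group 4-connected foreground pixels and return one (top, left, bottom, right) box per blob."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            top, left, bottom, right = r, c, r, c
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:  # breadth-first flood fill over one blob
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))  # inclusive pixel coordinates
    return boxes


def crop(gray, box):
    """Cut out the sub-image inside a box so it can be classified on its own."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in gray[top:bottom + 1]]


def looks_like_insect(box, min_pixels=2):
    """Hypothetical placeholder: a real system would use a trained insect/non-insect classifier."""
    top, left, bottom, right = box
    return (bottom - top + 1) * (right - left + 1) >= min_pixels


# Bright screen (1.0) with one dark 2x2 "insect" and one dark speck of "dirt".
gray = [
    [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
    [1.0, 0.2, 0.1, 1.0, 1.0, 1.0],
    [1.0, 0.3, 0.2, 1.0, 1.0, 0.4],
    [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
]
boxes = bounding_boxes(foreground_mask(gray))         # [(1, 1, 2, 2), (2, 5, 2, 5)]
insects = [b for b in boxes if looks_like_insect(b)]  # [(1, 1, 2, 2)]
crops = [crop(gray, b) for b in insects]              # [[[0.2, 0.1], [0.3, 0.2]]]
```

Here the dark blob is kept and cropped, while the single dark pixel is discarded. Real camera trap images would need far more robust methods than a fixed threshold, which is exactly why the text calls for AI at this step.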

Species identification with AI

For the visual identification of an insect from a single image (or a section of a camera trap image), artificial neural networks are used to extract characteristic features that describe, for example, the coloration and texture of the wings as numerical values. Based on these values, classification methods from pattern recognition and machine learning then assign the insect to a category.
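
As a rough illustration of the two stages (feature extraction, then classification), the sketch below computes two hand-crafted numbers per image crop, mean brightness and a simple texture measure, and assigns the label of the closest class mean. This is a stand-in under stated assumptions: in the project the features come from neural networks, and the class names and mean values here are hypothetical.

```python
import math


def extract_features(gray):
    """Stand-in for a neural feature extractor: (mean brightness, horizontal texture)."""
    pixels = [v for row in gray for v in row]
    mean = sum(pixels) / len(pixels)
    # Texture: average absolute difference between horizontally adjacent pixels.
    diffs = [abs(row[i + 1] - row[i]) for row in gray for i in range(len(row) - 1)]
    texture = sum(diffs) / len(diffs)
    return (mean, texture)


def nearest_class_mean(features, class_means):
    """Assign the label whose mean feature vector is closest in Euclidean distance."""
    return min(class_means, key=lambda label: math.dist(features, class_means[label]))


# Hypothetical per-class mean features, as if computed from labeled training crops.
class_means = {
    "species_A": (0.2, 0.05),  # dark, fairly uniform wings
    "species_B": (0.6, 0.40),  # lighter, strongly patterned wings
}

dark_crop = [[0.2, 0.1], [0.3, 0.2]]
label = nearest_class_mean(extract_features(dark_crop), class_means)  # "species_A"
```

A nearest-class-mean rule is one of the simplest classifiers; a neural network would typically end in a learned layer that outputs a score or probability per species instead.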

Knowledge integration in AI models and learning processes

The LEPMON project aims to integrate various forms of additional knowledge, known as expert or domain knowledge, into the AI models in order to further improve species identification, rather than learning only from the “pure” pixel patterns in the image.

Shape and symmetry characteristics

For example, moths differ greatly in the shape of their wings, so certain species can already be ruled out based on an animal’s silhouette. If the forewings and hindwings are spread, the outline resembles the letter “V”; if the wings are folded backwards, it looks more like the letter “A.” These distinguishing criteria are to be explicitly integrated into the AI models. In addition, other studies have shown that certain species tend to sit on the screen surface with their wings folded upwards, while other species always rest with their wings spread. This also leads to different outlines and contours, which can be taken into account in automated identification.
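
One simple way to turn a silhouette into numbers is sketched below, assuming that a binary mask of the moth (1 = moth pixel) is available and that the head points towards the top of the image. `mirror_symmetry` scores left-right symmetry, and `v_or_a` compares the widest part of the upper and lower halves of the outline. Both functions are hypothetical illustrations of shape features, not the criteria used in LEPMON.

```python
def mirror_symmetry(mask):
    """Intersection-over-union of a silhouette and its left-right mirror image (1.0 = symmetric)."""
    mirrored = [row[::-1] for row in mask]
    pairs = [(a, b) for r1, r2 in zip(mask, mirrored) for a, b in zip(r1, r2)]
    inter = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return inter / union if union else 0.0


def row_width(row):
    """Horizontal extent of the foreground in one row (0 if the row is empty)."""
    cols = [i for i, v in enumerate(row) if v]
    return cols[-1] - cols[0] + 1 if cols else 0


def v_or_a(mask):
    """'V' if the outline is widest in the upper half, else 'A' (assumes head at the top)."""
    half = len(mask) // 2
    upper = max((row_width(r) for r in mask[:half]), default=0)
    lower = max((row_width(r) for r in mask[half:]), default=0)
    return "V" if upper > lower else "A"


# Toy silhouettes: wide at the top (spread wings) vs. wide at the bottom (folded back).
mask_v = [[1, 1, 1, 1, 1, 1],
          [0, 1, 1, 1, 1, 0],
          [0, 0, 1, 1, 0, 0],
          [0, 0, 1, 1, 0, 0]]
mask_a = mask_v[::-1]

shape_v, shape_a = v_or_a(mask_v), v_or_a(mask_a)  # "V", "A"
symmetry = mirror_symmetry(mask_v)                 # 1.0
```

Features like these could be passed to the classifier alongside the learned image features, or used to rule out species whose typical resting posture does not match the observed outline.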

Size information and measurable properties

Since all images captured with ARNI include a scale, image processing methods can automatically convert pixels into units of length (mm, cm), so the absolute sizes of the insects can be extracted from the images. Without a scale, this would not be possible because of varying camera distances, zoom levels, and image resolutions. Since each species covers only a certain size range in its adult stage, some species can also be excluded purely on the basis of an animal’s measured size. This can further improve the quality of species identification and make the identification algorithms more robust.
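
The scale conversion and size-based exclusion take only a few lines. The sketch assumes that the length of the scale in pixels and the size of the insect in pixels (here, the bounding-box width) have already been measured; the species names and size ranges are hypothetical placeholders, not real data. `apply_size_prior` shows one possible way to combine the size check with classifier probabilities.

```python
def mm_per_pixel(scale_length_mm, scale_length_px):
    """Conversion factor from the reference scale visible in every ARNI image."""
    return scale_length_mm / scale_length_px


def plausible_species(size_mm, size_ranges, tolerance_mm=1.0):
    """Keep species whose adult size range (plus a small tolerance) contains the measured size."""
    return [name for name, (low, high) in size_ranges.items()
            if low - tolerance_mm <= size_mm <= high + tolerance_mm]


def apply_size_prior(probs, candidates):
    """Drop species ruled out by size and renormalize the remaining classifier probabilities."""
    kept = {s: p for s, p in probs.items() if s in candidates}
    total = sum(kept.values())
    return {s: p / total for s, p in kept.items()} if total > 0 else {}


# Hypothetical size ranges in mm, for illustration only (not real species data).
size_ranges = {
    "species_A": (10.0, 14.0),
    "species_B": (30.0, 40.0),
    "species_C": (18.0, 26.0),
    "species_D": (21.0, 25.0),
}

factor = mm_per_pixel(50.0, 400)  # a 50 mm scale spanning 400 px -> 0.125 mm/px
width_mm = 176 * factor           # a bounding box 176 px wide -> 22.0 mm
candidates = plausible_species(width_mm, size_ranges)  # ["species_C", "species_D"]

# Hypothetical classifier output before the size check.
probs = {"species_A": 0.2, "species_B": 0.4, "species_C": 0.3, "species_D": 0.1}
rescored = apply_size_prior(probs, candidates)  # species_C ~0.75, species_D ~0.25
```

The tolerance leaves some room for measurement error and for individuals at the edge of a species’ size range; without it, a slightly mis-measured specimen could wrongly exclude the correct species.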

Taxonomy of insects

In entomology, it is well known that not all insects can be clearly identified from visual characteristics and photographs alone. For some species, DNA analysis or a morphological examination of internal structures is necessary. AI must be able to deal with this uncertainty and, in the case of unresolvable ambiguities where the evidence points to several candidate species, deliver a meaningful output that is useful for subsequent analysis. In such cases, the AI should return the genus or family rather than the species name, i.e., an identification at a higher taxonomic level. To this end, the underlying taxonomy of insects, with its tree-like dependencies between families, genera, and species, will be integrated into the AI models.
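
A simple way to fall back to a higher taxonomic level is to sum the species probabilities within each genus and family and report the most specific rank whose probability reaches a threshold. The sketch below assumes a classifier that outputs species probabilities; the taxonomy, the taxon names, and the threshold of 0.8 are hypothetical, and this post-hoc aggregation is only one possible approach, not necessarily how taxonomy is integrated into the LEPMON models.

```python
from collections import defaultdict

# Hypothetical taxonomy: species -> (genus, family).
TAXONOMY = {
    "species_1": ("genus_X", "family_F"),
    "species_2": ("genus_X", "family_F"),
    "species_3": ("genus_Y", "family_F"),
    "species_4": ("genus_Z", "family_G"),
}


def aggregate(probs, level):
    """Sum species probabilities per genus (level=0) or per family (level=1)."""
    totals = defaultdict(float)
    for species, p in probs.items():
        totals[TAXONOMY[species][level]] += p
    return dict(totals)


def hierarchical_prediction(probs, threshold=0.8):
    """Return (rank, taxon, probability) for the most specific rank that reaches the threshold."""
    levels = [("species", probs),
              ("genus", aggregate(probs, 0)),
              ("family", aggregate(probs, 1))]
    for rank, dist in levels:
        taxon, p = max(dist.items(), key=lambda item: item[1])
        if p >= threshold:
            return rank, taxon, p
    return "unresolved", None, 0.0


# Two similar species of the same genus: the species is ambiguous, the genus is not.
ambiguous = {"species_1": 0.45, "species_2": 0.42, "species_3": 0.08, "species_4": 0.05}
result = hierarchical_prediction(ambiguous)  # ("genus", "genus_X", ~0.87)
```

Because the probabilities of sibling species add up at the parent node, confidence can only stay equal or grow when moving up the tree, so the output becomes less specific but more reliable exactly when the species cannot be told apart.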